The emergence of Large Language Models (LLMs) is redefining how we interact with technology. Successive generations of these models have sat at the leading edge of artificial intelligence, from generating human-like text to supporting complex tasks. But how do you create an LLM yourself? This guide walks you through the process of building a Large Language Model. By the end, you should have a solid idea of what LLMs are, the different types that exist, and the process involved in creating one.

What is an LLM?

A Large Language Model (LLM) is an artificial intelligence model that can comprehend and generate human language. It is trained on massive amounts of text, from which it learns the patterns, grammar, and meanings of words and sentences. When you give an LLM an input, it processes that input and responds in a way that reads as very ‘human.’ LLMs are used in AI chatbots, language translation, and content generation.

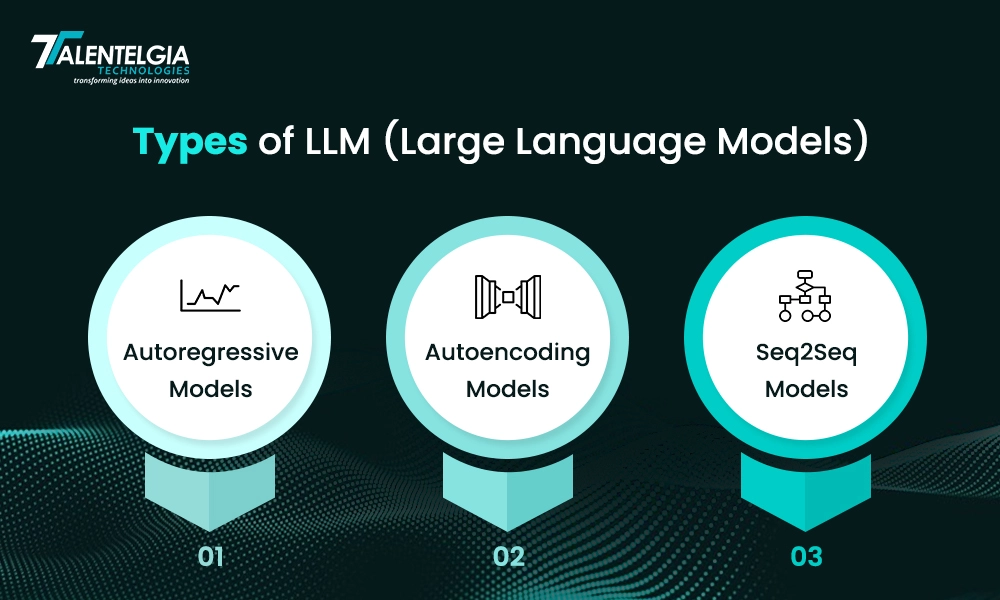

Types of LLM (Large Language Models)

Large Language Models (LLMs) are developed for distinct purposes, and they differ accordingly. There are many types of LLM, but the three major ones are the following:

- Autoregressive models: These models predict the continuation of a sentence from the words that precede it. All GPT models belong to this category, and they are useful for a variety of tasks, chief among them text generation.

- Autoencoding models: These models learn to reconstruct the original input from a corrupted version of it. A popular example is BERT, which is used in tasks such as sentence classification.

- Seq2Seq models: These models take one sequence as input and produce another sequence as output. This makes them very useful in translation and summarization tasks.

Understanding these types will help you choose the right model for your needs when learning how to create an LLM.
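To make the difference between the three types more concrete, here is a minimal sketch of the kind of training example each one learns from. It is only an illustration: the function names, the `[MASK]` placeholder, and the sample sentences are assumptions made for this example, and real models work on token IDs rather than words.

```python
import random

def autoregressive_pairs(tokens):
    """Autoregressive: each prefix is the context, the next token is the target."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def masked_example(tokens, mask_rate=0.3, seed=0, mask_token="[MASK]"):
    """Autoencoding: corrupt the input by masking tokens; the targets are the originals."""
    rng = random.Random(seed)
    corrupted, targets = [], {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_rate:
            corrupted.append(mask_token)
            targets[position] = token  # the model must recover this token
        else:
            corrupted.append(token)
    return corrupted, targets

def seq2seq_example(source_tokens, target_tokens):
    """Seq2Seq: one whole sequence in, a different whole sequence out."""
    return {"input": source_tokens, "output": target_tokens}

tokens = "the cat sat on the mat".split()
print(autoregressive_pairs(tokens)[:2])
print(masked_example(tokens))
print(seq2seq_example(tokens, "le chat s'est assis sur le tapis".split()))
```

The same sentence yields three very different learning problems, which is why the choice of model type depends on the task you have in mind.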

Build Your Own LLM: Design Model Architecture

The first step in creating an LLM is to design your model’s architecture. The design defines how the model is structured, including how many layers it has and how they connect. Most LLMs are built on the Transformer architecture because it handles difficult language tasks well.

Creating the Transformer’s Components

The Transformer model has two main components: the encoder and the decoder.

- Encoder: The encoder maps input text into vectors that represent the content of the text. It has a multi-layered structure, where each layer aims to capture a different aspect of the input.

- Decoder: Given the encoded information, the decoder works from the encoder’s output to produce coherent and contextually meaningful responses.

Joining the Encoder and Decoder

The next step in developing an LLM is to join the encoder and decoder. The encoder receives the input data, while the decoder produces the output data. The two components have to be joined to form a complete language model that can both comprehend and generate text.

Combining the Encoder and Decoder to Complete the Transformer

To complete the Transformer, you attach the encoder to the decoder. This means setting up the layers and the connections between them so that data moves correctly from input to output. The finished model should be able to process an input sentence through the encoder and produce a reply through the decoder.
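The wiring described above can be sketched in a few lines. This is a structural illustration only, not a real Transformer: the classes `ToyEncoder`, `ToyDecoder`, and `ToyEncoderDecoder` are hypothetical, the weights are random and untrained, and the attention layers a real model relies on are left out. The point is simply to show data flowing from input tokens, through the encoder's vectors, to the decoder's output tokens.

```python
import random

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

class ToyEncoder:
    """Maps each input token to a vector (random, untrained embeddings)."""
    def __init__(self, vocab, dim=4, seed=0):
        rng = random.Random(seed)
        self.embed = {tok: [rng.uniform(-1, 1) for _ in range(dim)] for tok in vocab}

    def __call__(self, tokens):
        return [self.embed[tok] for tok in tokens]

class ToyDecoder:
    """Turns the encoder's vectors into output tokens, one step at a time."""
    def __init__(self, vocab, dim=4, seed=1):
        rng = random.Random(seed)
        self.vocab = list(vocab)
        self.out_vecs = {tok: [rng.uniform(-1, 1) for _ in range(dim)] for tok in self.vocab}

    def __call__(self, memory, max_len=5):
        dim = len(memory[0])
        # Summarize the encoder output as the mean of its vectors.
        context = [sum(vec[i] for vec in memory) / len(memory) for i in range(dim)]
        output = []
        for _ in range(max_len):
            # Pick the vocabulary token whose vector best matches the context.
            best = max(self.vocab, key=lambda tok: _dot(context, self.out_vecs[tok]))
            output.append(best)
            if best == "<eos>":
                break
            # Feed the chosen token back in, so each step depends on earlier ones.
            context = [(c + v) / 2 for c, v in zip(context, self.out_vecs[best])]
        return output

class ToyEncoderDecoder:
    """Joins the two parts: input tokens -> encoder -> decoder -> output tokens."""
    def __init__(self, vocab):
        self.encoder = ToyEncoder(vocab)
        self.decoder = ToyDecoder(vocab)

    def __call__(self, tokens):
        memory = self.encoder(tokens)
        return self.decoder(memory)

vocab = ["hello", "world", "how", "are", "you", "<eos>"]
model = ToyEncoderDecoder(vocab)
reply = model(["how", "are", "you"])
print(reply)
```

Because nothing has been trained yet, the reply is meaningless. Training is what turns this empty plumbing into a model that produces sensible text.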

Training Custom LLM

Training is the most crucial stage in building a Large Language Model. It involves running the model over a large corpus of text data and adjusting its parameters to minimize the errors it makes. Training is computationally heavy, so it typically requires powerful hardware and long timespans.
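The idea of adjusting parameters to reduce error can be shown on a deliberately tiny model. The sketch below assumes a model with just two parameters, `w` and `b`, and uses plain gradient descent on a mean squared error. An LLM has billions of parameters and a different loss, but the loop has the same shape: predict, measure the error, nudge the parameters, repeat. The data, learning rate, and function names are all made up for illustration.

```python
def predict(w, b, x):
    return w * x + b

def mean_squared_error(data, w, b):
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)

def train(data, lr=0.05, epochs=1000):
    """Gradient descent: repeatedly move w and b in the direction that lowers the error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Toy data generated from y = 2x + 1, so the ideal parameters are w=2, b=1.
data = [(x, 2 * x + 1) for x in range(5)]
w, b = train(data)
print("error before training:", mean_squared_error(data, 0.0, 0.0))
print("error after training: ", mean_squared_error(data, w, b))
```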

How Long Does It Take to Train an LLM from Scratch?

Training an LLM from scratch can take anywhere from a few days to many weeks, depending on the model’s size and the quantity of data. The process also requires powerful GPUs or TPUs to handle the computations. It is also vital to monitor and adjust the training process to make sure the model is learning as desired.
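For a rough feel of where those days and weeks come from, a back-of-the-envelope estimate helps. The sketch below uses a commonly cited rule of thumb for dense Transformer models, that training costs roughly 6 × parameters × training tokens floating-point operations. Every number in the example (model size, token count, GPU count, per-GPU throughput, utilization) is a hypothetical input chosen for illustration, not a measurement.

```python
def estimate_training_days(n_params, n_tokens, n_gpus, peak_flops_per_gpu, utilization):
    """Rough estimate: total compute divided by the compute you can actually sustain."""
    total_flops = 6 * n_params * n_tokens  # rule-of-thumb approximation
    sustained_flops_per_second = n_gpus * peak_flops_per_gpu * utilization
    seconds = total_flops / sustained_flops_per_second
    return seconds / 86_400  # seconds in a day

# Hypothetical setup: 1B parameters, 20B tokens, 8 GPUs at 1e14 FLOP/s peak, 40% utilization.
days = estimate_training_days(
    n_params=1e9,
    n_tokens=20e9,
    n_gpus=8,
    peak_flops_per_gpu=1e14,
    utilization=0.4,
)
print(f"about {days:.1f} days")
```

With these assumed inputs the estimate comes out at a little over four days. Doubling the model size or the data doubles the time, which is how larger models end up taking weeks.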

Key Elements of LLM

Before jumping into how to create an LLM, you first need to understand the key elements that make up a Large Language Model.

- Data: The quality and quantity of the training data are two of the most important factors in an LLM’s performance. A good dataset lets an LLM learn the distinguishing features, syntax, semantics, and contextual use of a language. The data should be diverse in its text types so that the model is exposed to different linguistic structures and content. This may include books, articles, academic papers, websites, and more. Each type of text contributes differently to training. For instance, books may add narrative and description, while articles may contribute more succinct, fact-based information. Websites may contribute informal language and broaden the range of topics represented. This variety helps an LLM generalize to diverse forms of text, improving its understanding and generation of human-like text across a wide spectrum.

- Architecture: The architecture defines how the model processes information and learns from the data. The most common architecture in LLMs is the Transformer, thanks to its ability to deal with long-range dependencies within text.

- Training: This is the process of tuning the model’s parameters to minimize its errors. It is achieved through backpropagation, where the model learns from its mistakes.

- Evaluation: After training your model, you need to test it to ensure it performs well on all the intended tasks. This can be done by testing it on separate test datasets or by comparing its output with human-generated text.
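As an illustration of the evaluation element, here is one simple way to compare a model's output with human-written text: a word-overlap F1 score. This is a sketch under stated assumptions. The `token_f1` function and the sample sentences are made up for this example, and word overlap is only a crude proxy for quality, so real evaluations usually combine several metrics with human review.

```python
from collections import Counter

def token_f1(candidate, reference):
    """Word-overlap F1 between a model output and a human-written reference."""
    cand_tokens = candidate.lower().split()
    ref_tokens = reference.lower().split()
    if not cand_tokens or not ref_tokens:
        return 0.0
    # Count words shared by both texts, respecting how often each appears.
    overlap = sum((Counter(cand_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

model_output = "the cat sat on the mat"
human_text = "the cat is sitting on the mat"
print(round(token_f1(model_output, human_text), 3))
```

A score of 1.0 means the two texts use exactly the same words, and 0.0 means they share none.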

LLM Training Techniques

As you learn to design an LLM, you’ll run into different methods of training, such as:

Supervised Learning

Supervised learning is a basic concept in machine learning in which the model is trained on labeled data. Labeled data means a dataset in which every input is paired with a corresponding correct output. Supervised learning works very well for tasks like language translation or summarization. Take language translation: a model is trained on a huge corpus of sentences in one language, each labeled with its correct translation in the other, and learns the patterns needed to translate new sentences from one language to the other.

The main advantage of supervised learning is its accuracy, since the model is trained on correct examples. On the other hand, this method requires considerable volumes of high-quality labeled data, which can be time-consuming, laborious, and expensive to create.
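Here is a minimal sketch of learning from labeled input-output pairs. It swaps translation for a much simpler task, labeling short texts as positive (+1) or negative (-1), and uses a classic perceptron rather than a neural language model. The four labeled examples and the function names are invented for illustration.

```python
from collections import defaultdict

def score(weights, bias, text):
    return bias + sum(weights.get(tok, 0.0) for tok in text.lower().split())

def classify(weights, bias, text):
    return 1 if score(weights, bias, text) > 0 else -1

def train_perceptron(examples, epochs=10):
    """Adjust word weights only when the prediction disagrees with the label."""
    weights, bias = defaultdict(float), 0.0
    for _ in range(epochs):
        for text, label in examples:
            if classify(weights, bias, text) != label:
                for tok in text.lower().split():
                    weights[tok] += label
                bias += label
    return weights, bias

# Every input comes with its correct output: this is what "labeled data" means.
labeled_examples = [
    ("great movie", 1),
    ("loved it", 1),
    ("terrible movie", -1),
    ("hated it", -1),
]
weights, bias = train_perceptron(labeled_examples)
for text, label in labeled_examples:
    print(text, "->", classify(weights, bias, text), "(expected", str(label) + ")")
```

Notice that the model can only learn because someone supplied the correct label for each sentence, which is exactly the cost the paragraph above describes.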

Unsupervised Learning

Unsupervised learning is another paradigm, in which models learn from data without explicit labels. Rather than being driven by distinct input-output pairs, the model seeks to learn underlying patterns, structures, or relationships in the data itself. It is therefore useful when labeled data is scarce or when you want to explore the intrinsic structure of a dataset.

In natural language processing, unsupervised learning is often used to pick up the structure of a language without explicit guidance. For example, in text generation tasks, the model learns the general patterns, grammar, and vocabulary of a language from large amounts of unlabeled text. It can then generate coherent and contextually appropriate text even though it has never been trained explicitly on particular examples.

Unsupervised learning is also critical when general patterns or clusters need to be learned from the data.
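Learning language patterns from unlabeled text can be illustrated with something far simpler than an LLM: a bigram model that counts which word tends to follow which. No labels are involved, only raw text. The sample corpus and the function names below are assumptions made for this sketch.

```python
from collections import Counter, defaultdict

def fit_bigrams(text):
    """Count, for every word, which words follow it in the raw text."""
    tokens = text.lower().split()
    counts = defaultdict(Counter)
    for prev_word, next_word in zip(tokens, tokens[1:]):
        counts[prev_word][next_word] += 1
    return counts

def generate(counts, start, max_len=8):
    """Greedy generation: always continue with the most frequent next word."""
    output = [start]
    while len(output) < max_len and counts.get(output[-1]):
        next_word = counts[output[-1]].most_common(1)[0][0]
        output.append(next_word)
    return output

corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
counts = fit_bigrams(corpus)
print(" ".join(generate(counts, "the")))
```

The counts are the model's entire knowledge of the language, and they were learned from the text alone. An LLM does the same kind of thing at vastly greater scale, with a neural network in place of a table of counts.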

Transfer Learning

Transfer learning applies what a model learned while solving one problem to improve performance on a different but related problem. It avoids training a model from scratch. Instead, training starts from a model whose weights were pre-trained on a large dataset for another problem. Fine-tuning this model on a task-specific dataset, often by adjusting only the last few layers, can give good results on the task at hand.

Transfer learning revolutionized NLP with the advent of large pre-trained models such as GPT and BERT, which are pre-trained on vast text corpora. The main advantage of transfer learning is efficiency. Because the model has already learned the general patterns of a language during pre-training, fine-tuning requires less data and time. This is especially useful when dealing with smaller datasets or limited computational resources.
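The idea of keeping pre-trained weights fixed and tuning only the last layer can be sketched on a toy scale. In the example below, `PRETRAINED` stands in for weights learned on some earlier task (the numbers are made up), and only the small `head` on top is trained on the new data. This is an illustration of the principle, not how a real fine-tuning library works.

```python
# Hypothetical "pre-trained" weights; they are frozen and never updated below.
PRETRAINED = [[0.5, -0.2], [0.1, 0.8]]

def features(x):
    """Frozen layer: a linear map followed by ReLU, reused as-is from pre-training."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED]

def predict(head, x):
    return sum(h * f for h, f in zip(head, features(x)))

def fine_tune_head(data, lr=0.1, epochs=1000):
    """Train only the final layer (the head) with simple gradient steps."""
    head = [0.0, 0.0]
    for _ in range(epochs):
        for x, y in data:
            f = features(x)
            error = sum(h * fi for h, fi in zip(head, f)) - y
            head = [h - lr * error * fi for h, fi in zip(head, f)]
    return head

# Small task-specific dataset; targets follow y = 2*f0 - 1*f1 on the frozen features.
inputs = [(1, 0), (0, 1), (1, 1), (2, 1)]
task_data = [(x, 2 * features(x)[0] - 1 * features(x)[1]) for x in inputs]
head = fine_tune_head(task_data)
print([round(h, 3) for h in head])
```

Only two numbers are learned here, which is why fine-tuning can get by with far less data and compute than training everything from scratch.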

Reinforcement Learning

Reinforcement learning takes a very different approach from the other machine learning paradigms. Here the model learns through trial and error by interacting with an environment. Feedback, usually in the form of rewards or penalties, steers the model toward better performance over time. The model aims to maximize its cumulative reward by learning an optimal sequence of actions in different situations.

Reinforcement learning is especially useful in NLP for tasks that require models to make decisions based on user interactions. For example, in chatbot development, reinforcement learning helps improve responses by learning from user feedback. If a chatbot response results in a good user experience, the model gets a reward, which encourages that behavior. If a response is unsatisfactory, the model is penalized and will try alternative actions.
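The reward-and-penalty loop from the chatbot example can be sketched as a simple epsilon-greedy bandit. The chatbot chooses between a few response styles, users "reward" it with some probability, and over time it favors the style that earns the most reward. The response styles, their reward probabilities, and the `run_bandit` function are hypothetical, and this is a much simpler technique than the reinforcement learning methods used to train production LLMs.

```python
import random

def run_bandit(reward_prob, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy: mostly pick the best-known response, sometimes explore."""
    rng = random.Random(seed)
    responses = list(reward_prob)
    value = {r: 0.0 for r in responses}  # running average reward per response
    count = {r: 0 for r in responses}    # how often each response was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            choice = rng.choice(responses)                    # explore
        else:
            choice = max(responses, key=lambda r: value[r])   # exploit
        # Simulated user feedback: 1.0 for a satisfied user, 0.0 otherwise.
        reward = 1.0 if rng.random() < reward_prob[choice] else 0.0
        count[choice] += 1
        value[choice] += (reward - value[choice]) / count[choice]
    return value, count

# Hypothetical chance that users are satisfied with each response style.
styles = {"helpful": 0.9, "vague": 0.3, "rude": 0.05}
value, count = run_bandit(styles)
print({style: round(v, 2) for style, v in value.items()})
print(count)
```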

Conclusion

Building an effective and powerful LLM can be both time- and resource-consuming. Every step, from designing the model’s architecture to assembling its components and, finally, training it, is essential to developing a model that can understand and generate human-like text. While such a model is time- and resource-intensive to create, recent advances in AI development make this journey more feasible than ever. Ultimately, the benefits of developing a robust LLM outweigh the challenges, offering transformative potential across a wide array of applications. We hope you have found answers to all your questions about creating an LLM.
