Data management is at the core of any successful organization. It drives decisions, strategies, and innovations. As businesses harness huge volumes of data from diverse sources, keeping that data flowing freely and in a usable form has become a top priority. This is where data integration and data ingestion come into play, and both require clarity in approach and execution.

These two aspects of data management often come up together, especially when organizations look to streamline their workflows and maximize data utility. Although their emphasis differs, they are connected and work together to create a data ecosystem that supports operational efficiency and actionable insights.

In this blog, we’ll cover the basics of data integration and data ingestion and the interplay between them. If navigating the complexities of data pipelines feels daunting, understanding this relationship is the key to building a robust and efficient data infrastructure.

What is Data Ingestion?

Data ingestion involves collecting data from multiple sources and transferring it into a centralized system, such as a database or data warehouse, for storage, analysis, and processing. This data can originate from a variety of sources, including financial platforms, social media channels, IoT devices, SaaS applications, and many others.

The main purpose here is to bring data into a clean, standardized form that users across the organization can share and apply for different purposes. Centralizing data also helps organizations make effective decisions while streamlining their work.

The ingested data can then be stored in purpose-built repositories, including a data lake, data warehouse, data lakehouse, data mart, relational database, or document storage system. These repositories make the data available to applications such as business intelligence, machine learning, predictive modeling, and artificial intelligence.

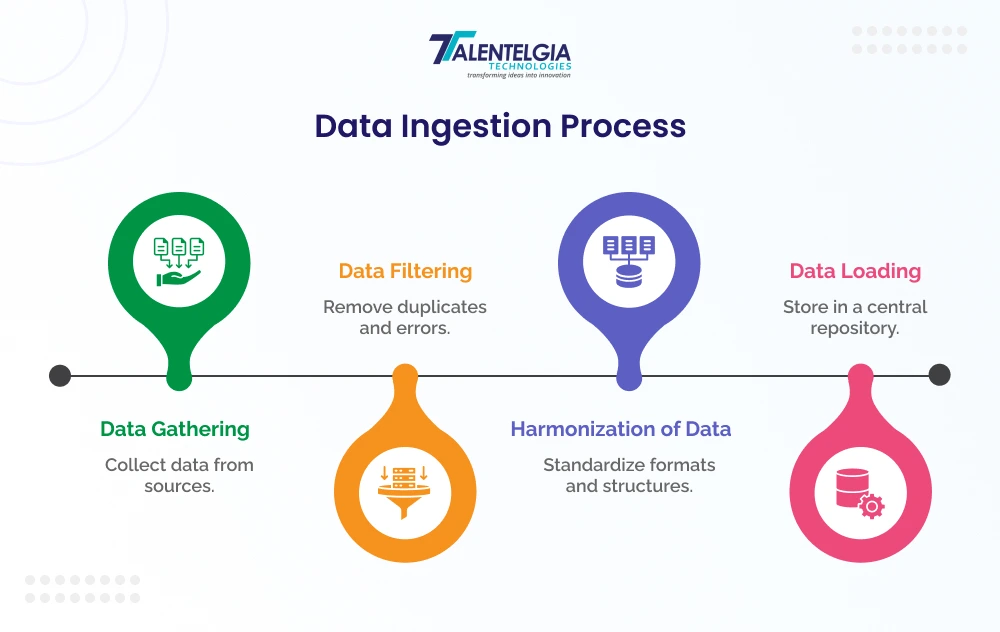

Data Ingestion Process

The data ingestion pipeline follows a set sequence to make sure data is gathered efficiently, processed, and then safely stored. Let’s go through each step:

- Data Gathering

The first step is gathering data from various sources, including advertising platforms, CRM systems, relational databases, and web pages. These sources generate a mix of structured and unstructured data.

- Data Filtering

Once obtained, the data is filtered to remove irrelevant or duplicate information. Once the data is confirmed to be free of irregularities, it is passed on to the next stage.

- Harmonization of Data

The data is standardized in the harmonization stage. Columns, formats, and types from the various sources are aligned to a common structure so the data can be easily combined and analyzed.

- Data Loading

Finally, cleaned and harmonized data is transferred to a central repository, such as a data warehouse or data lake, to be ready for analysis, reporting, and other processing techniques.
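The four steps above can be sketched in a few lines of Python. This is only a minimal illustration, not a production pipeline: the sample records, the field names, and the in-memory list standing in for the central repository are all assumptions made for the example.

```python
# Hypothetical raw records from two sources that name their fields differently.
CRM_ROWS = [
    {"Email": "Ana@Example.com", "Signup": "2024-01-05"},
    {"Email": "ana@example.com", "Signup": "2024-01-05"},  # duplicate of the first
    {"Email": "", "Signup": "2024-02-01"},                 # irrelevant: no email
]
ADS_ROWS = [
    {"email_address": "bo@example.com", "signup_date": "2024-03-10"},
]


def _email(row):
    """Return a normalized email regardless of which source the row came from."""
    return (row.get("Email") or row.get("email_address") or "").strip().lower()


def gather():
    """Step 1: collect raw records from every source."""
    return [*CRM_ROWS, *ADS_ROWS]


def filter_rows(rows):
    """Step 2: drop records without an email and records already seen."""
    seen, kept = set(), []
    for row in rows:
        key = _email(row)
        if not key or key in seen:
            continue
        seen.add(key)
        kept.append(row)
    return kept


def harmonize(rows):
    """Step 3: map every record onto one set of column names and formats."""
    return [
        {"email": _email(r), "signup_date": r.get("Signup") or r.get("signup_date")}
        for r in rows
    ]


def load(rows, repository):
    """Step 4: write the harmonized records to the central repository."""
    repository.extend(rows)
    return len(rows)


def run_ingestion(repository):
    """Run the steps in the order described above."""
    return load(harmonize(filter_rows(gather())), repository)
```

Calling `run_ingestion(warehouse)` with an empty list as `warehouse` leaves two harmonized records in it: one for Ana and one for Bo. In a real setup the list would be replaced by a warehouse or data lake client.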

Types of Data Ingestion

There are three primary approaches to data ingestion, each suited to specific business requirements. Let’s dive into them:

- Batch Ingestion: Data is collected and processed in batches at scheduled intervals, which can be hourly, daily, or weekly depending on the scenario. Batch ingestion is usually ideal for non-urgent cases where real-time data is not needed but regular updates are.

- Real-Time Ingestion: In this case, data is ingested as soon as it is generated. This ensures immediate access to real-time updates and is useful in scenarios where real-time analytics are required.

- Event-Based Ingestion: Data is ingested in response to specific events, such as a user action like a customer making a purchase. This keeps processing efficient by targeting only relevant information.
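To make the contrast between the three approaches concrete, here is a small Python sketch. It is a simplification under stated assumptions: the function names are hypothetical, a plain list stands in for the target repository, and a fixed batch size stands in for a real schedule.

```python
def batch_ingest(records, batch_size, sink):
    """Batch: write records in groups. The fixed batch size is a stand-in
    for a schedule such as hourly or daily."""
    batches = 0
    for start in range(0, len(records), batch_size):
        sink.extend(records[start:start + batch_size])
        batches += 1
    return batches


def realtime_ingest(stream, sink):
    """Real-time: write each record as soon as it arrives from the stream."""
    count = 0
    for record in stream:
        sink.append(record)
        count += 1
    return count


def event_ingest(events, trigger, sink):
    """Event-based: ingest only the records tied to one specific event type."""
    matched = [event for event in events if event["type"] == trigger]
    sink.extend(matched)
    return len(matched)


# Hypothetical activity log used to exercise the three functions.
EVENTS = [
    {"type": "page_view", "user": "ana"},
    {"type": "purchase", "user": "ana"},
    {"type": "page_view", "user": "bo"},
    {"type": "purchase", "user": "bo"},
    {"type": "page_view", "user": "cy"},
]
```

With these five sample events, `batch_ingest(EVENTS, 2, sink)` performs three writes, `realtime_ingest(iter(EVENTS), sink)` performs five, and `event_ingest(EVENTS, "purchase", sink)` stores only the two purchases.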

What Is Data Integration?

Data integration is the harmonization and alignment of data gathered from different sources into one schema. Data held in different formats, structures, and locations is consolidated into one format that is accessible and useful for analysis, operational needs, and decision-making.

Modern organizations gather data from many sources, including databases, applications, spreadsheets, APIs, and cloud-based platforms. That data is stored in different formats and structures across different locations, which creates problems like data silos and inconsistency.

Data integration is aimed at overcoming these challenges through the consolidation of disparate data, transforming it into a common format, and making it easily accessible for business requirements.

While data ingestion focuses on bringing raw data into a repository, data integration goes a step further. It covers harmonizing and preparing data for advanced workflows, including visualization, business intelligence, and analytics. This expanded scope makes it critical for achieving actionable insights and informed decision-making.

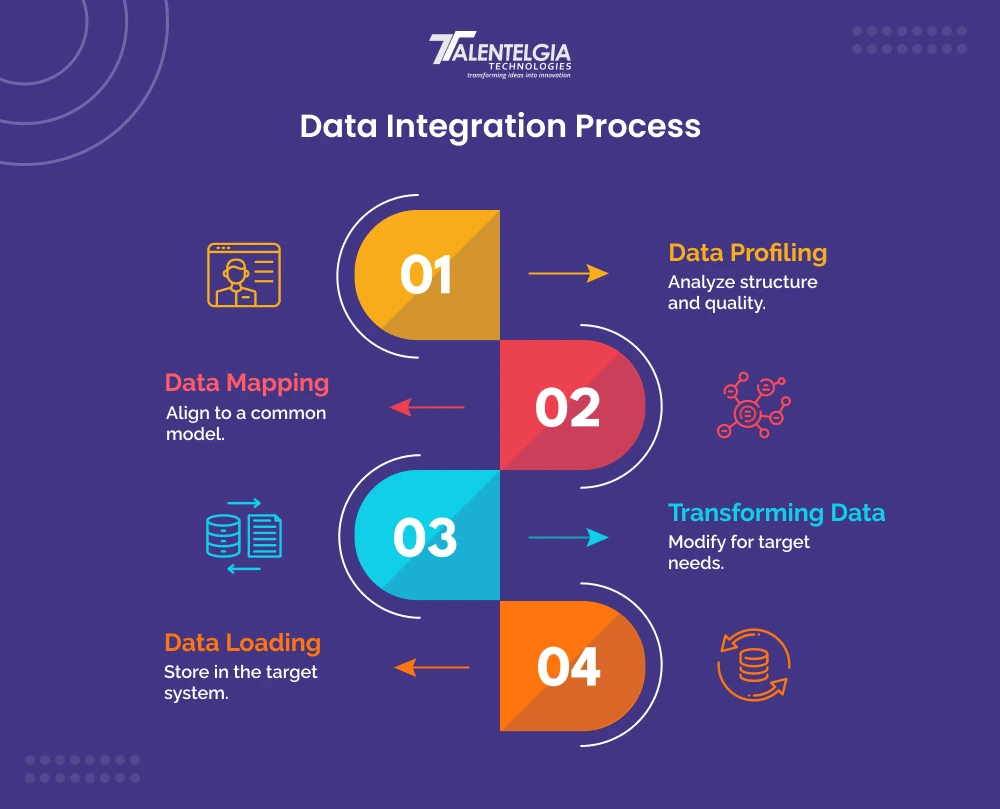

Data Integration Process

The data integration process involves several steps to ensure that data from various sources is combined, standardized, and prepared for use appropriately. The key steps involve:

- Data Profiling: At this stage, the data is analyzed to understand its structure and quality. This surfaces inconsistencies early so they can be resolved in the later stages of data integration.

- Data Mapping: The data from different sources is mapped into a common data model, consistent with what the target system requires. In this process, corresponding data elements are matched and merged.

- Transforming Data: The data is transformed according to the target system’s specific needs. This can include cleaning, enriching, or reformatting it into the right structure for analysis or further processing.

- Data Loading: The transformed data is loaded into the target system, which could be a data warehouse, a data lake, or any other repository. There it is stored and made readily available for analytical and operational uses.
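The steps above can be sketched as follows. This is an illustrative example rather than a reference implementation: the two source tables, the field mappings, and the dictionary standing in for the target system are all made up for the sketch.

```python
# Hypothetical records from two systems describing the same customers.
CRM = [{"cust_id": "1", "full_name": " Ana Ruiz ", "country": "es"}]
BILLING = [
    {"customer": "1", "total_eur": "120.50"},
    {"customer": "2", "total_eur": None},
]

# Data mapping: source field name -> field name in the common data model.
CRM_MAP = {"cust_id": "customer_id", "full_name": "name", "country": "country"}
BILLING_MAP = {"customer": "customer_id", "total_eur": "total"}


def profile(rows):
    """Data profiling: count missing values per field to gauge quality."""
    missing = {}
    for row in rows:
        for field, value in row.items():
            if value is None or value == "":
                missing[field] = missing.get(field, 0) + 1
    return missing


def map_fields(row, mapping):
    """Data mapping: rename source fields to the common model."""
    return {mapping[key]: value for key, value in row.items() if key in mapping}


def transform(row):
    """Transformation: clean values and convert types for the target."""
    out = dict(row)
    if "name" in out:
        out["name"] = out["name"].strip()
    if "country" in out:
        out["country"] = out["country"].upper()
    if "total" in out and out["total"] is not None:
        out["total"] = float(out["total"])
    return out


def integrate():
    """Loading: merge mapped and transformed records into one view per customer."""
    unified = {}
    for rows, mapping in ((CRM, CRM_MAP), (BILLING, BILLING_MAP)):
        for row in rows:
            record = transform(map_fields(row, mapping))
            unified.setdefault(record["customer_id"], {}).update(record)
    return unified
```

Here `profile(BILLING)` reports one missing `total_eur` value, and `integrate()` returns a single record per customer that combines the CRM and billing fields under the common names.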

Types of Data Integration

The type of data integration architecture used depends on business needs. Some common types include:

- Manual Integration: This involves integrating data by hand using simple tools like spreadsheets and databases. It is usually time-consuming and error-prone but can be useful in simple scenarios or with small datasets.

- ETL (Extract, Transform, Load): This is one of the most widely used techniques for data integration. In ETL, data is extracted from different sources, transformed into a compatible structure, and then loaded into a target system such as a data warehouse. ETL is especially helpful for batch processing and large data volumes.

- ELT (Extract, Load, Transform): Unlike ETL, ELT loads the extracted data into the target system first and transforms it there. Because raw data lands in the target without waiting for transformation, this is useful for scenarios that need continuously updated data.

- API Integration: API-based integration connects different systems through application programming interfaces. This allows seamless data transfer from one system to another and is convenient for integrating different cloud services or applications.
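The difference between ETL and ELT is mostly about where the transformation runs. The sketch below shows both with Python's built-in `sqlite3` module, using an in-memory database as a stand-in for a data warehouse. The table names and sample rows are assumptions made for the example.

```python
import sqlite3

# Hypothetical extracted rows: lowercase names, amounts stored as text.
RAW = [("ana", "12.50"), ("bo", "7.25")]


def etl(conn):
    """ETL: transform in application code, then load the cleaned rows."""
    cleaned = [(name.title(), float(amount)) for name, amount in RAW]
    conn.execute("CREATE TABLE sales_etl (name TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?)", cleaned)


def elt(conn):
    """ELT: load the raw rows first, then transform inside the target with SQL."""
    conn.execute("CREATE TABLE sales_raw (name TEXT, amount TEXT)")
    conn.executemany("INSERT INTO sales_raw VALUES (?, ?)", RAW)
    conn.execute(
        "CREATE TABLE sales_elt AS "
        "SELECT upper(substr(name, 1, 1)) || substr(name, 2) AS name, "
        "CAST(amount AS REAL) AS amount "
        "FROM sales_raw"
    )


def run():
    """Run both approaches against one in-memory database and return the results."""
    conn = sqlite3.connect(":memory:")
    etl(conn)
    elt(conn)
    etl_rows = conn.execute("SELECT name, amount FROM sales_etl ORDER BY name").fetchall()
    elt_rows = conn.execute("SELECT name, amount FROM sales_elt ORDER BY name").fetchall()
    conn.close()
    return etl_rows, elt_rows
```

Both approaches end with the same cleaned rows. The practical difference is that ELT also keeps the untouched `sales_raw` table in the target, so the transformation can be rerun or changed later without extracting the data again.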


Data Integration Vs Data Ingestion: Main Differences

Here’s a table summarizing the key differences between Data Ingestion and Data Integration:

| Feature | Data Ingestion | Data Integration |
| --- | --- | --- |
| Definition | The process of collecting and importing raw data from various sources into a data storage system. | The process of combining data from multiple sources into a unified view. |
| Focus | Collecting and storing data. | Combining, transforming, and cleaning data. |
| Data State | Raw, unstructured, or semi-structured data. | Cleaned, structured, and consistent data. |
| Granularity | Coarse-grained; focuses on large datasets. | Fine-grained; focuses on individual data elements. |
| Complexity | Less complex; often automated. | More complex; involves data cleansing, ETL, and other processes. |
| Skill Requirement | Less specialized; can be handled by data engineers or analysts. | Requires skilled data engineers or ETL developers. |
| Latency | Lower latency; focuses on capturing data in real time. | Higher latency, as data transformation and cleaning take time. |
| Goal | Make data available for further processing and analysis. | Provide a unified, consistent view of data for better decision-making. |

Conclusion

When it comes to choosing between data integration and data ingestion, the choice usually depends on the requirements of the organization and the specific phase of the data lifecycle being targeted. Data ingestion focuses on collecting raw data from multiple sources, which provides the basis for further processing or analysis. This step is crucial when you need to capture high-volume, real-time data or incorporate different sources like social media feeds, IoT devices, or cloud-based applications.

On the other hand, data integration goes a step beyond ingestion: it ensures that data coming from multiple sources is harmonized, cleaned, and transformed into a coherent format for analytical or operational use. It is required when the goal is to merge individual datasets into a single integrated view for decision-making, predictive analytics, or reporting.

In general, data ingestion is the first step in the data pipeline and sets the stage for effective data integration. Data integration then makes it possible to use that data to generate insights. The two processes are interlinked but serve different functions, and both are usually necessary because they complement each other in modern data management strategies.

For businesses that aim to capture real-time data, ingestion tools are a must. However, organizations with complex analytics or reporting needs may require data integration to make sense of and leverage the ingested data correctly. Therefore, it is important to analyze your business needs and make the final choice accordingly.
