Generative AI models are changing how we create and interact with data by enabling machines to produce new, original content on their own. They are among the latest innovations in machine learning and AI. According to Bloomberg, the generative AI market is projected to grow from just $40 billion in 2022 to $1.3 trillion over the next 10 years. This signals the growing potential of generative AI across industries.

But why is generative AI gaining so much importance? To answer that, we first need to understand what generative AI is, its main types, and how they work.

Generative AI refers to AI systems that learn from large datasets and then produce new outputs, such as text, images, audio, or code, in response to user prompts. These models learn the patterns in their training data and use them to generate similar content on demand. This versatility allows generative AI to be used across industries for content creation, automation, and problem-solving.

For instance, in software development, generative AI tools like GitHub Copilot assist developers by generating code snippets and suggesting relevant solutions. This helps developers spend less time on routine coding problems and frees them to focus on higher-level problem-solving.

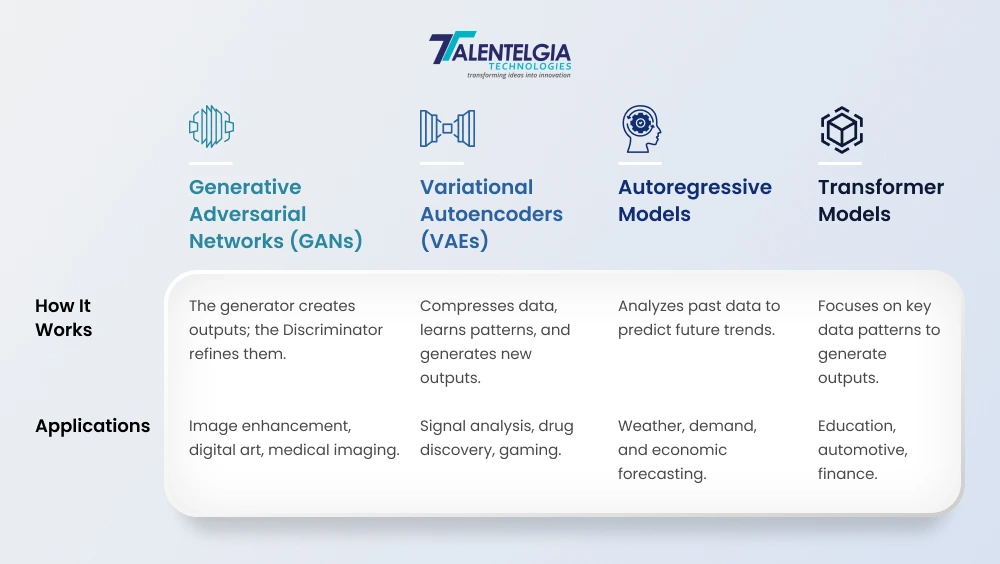

Types Of Generative AI

Generative Adversarial Network

A Generative Adversarial Network (GAN) consists of two neural networks trained against each other to produce realistic data, most commonly images: a generator, which creates new samples, and a discriminator, which judges whether a sample is real or generated. Here's how the two interact:

- The generator turns a random input into a candidate output, for instance an image of a person's face or an animated photograph.

- The discriminator evaluates that output and estimates whether it is real (taken from the training data) or generated.

- The generator uses the discriminator's feedback to improve its next outputs, while the discriminator keeps getting better at spotting fakes.


Repeating this contest many times makes the generator increasingly good at producing realistic outputs, to the point where it can be hard to tell generated samples from real ones. This is what makes GANs so effective at generating realistic images and other data.
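To make the generator/discriminator loop concrete, here is a toy one-dimensional GAN in plain Python. It is a minimal sketch under our own assumptions, not a production implementation: the "real" data is drawn from a normal distribution with mean 4, the generator is a linear function of Gaussian noise, the discriminator is a single logistic unit, and we add weight decay to the discriminator (our addition) to damp the oscillations that simple GAN training is prone to. The function names are hypothetical.

```python
import math
import random


def sigmoid(u):
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(steps=3000, batch=32, lr=0.05, weight_decay=0.5, seed=0):
    """Alternate discriminator and generator gradient steps; return generator params."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: x_fake = a * z + b, with z ~ N(0, 1)
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]  # assumed "real" data
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in zs]

        # Discriminator step: minimise -log D(real) - log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            s = sigmoid(w * x + c)
            gw -= (1.0 - s) * x
            gc -= 1.0 - s
        for x in fake:
            s = sigmoid(w * x + c)
            gw += s * x
            gc += s
        w -= lr * (gw / batch + weight_decay * w)
        c -= lr * (gc / batch + weight_decay * c)

        # Generator step (non-saturating loss): minimise -log D(fake).
        ga = gb = 0.0
        for z in zs:
            s = sigmoid(w * (a * z + b) + c)
            g = -(1.0 - s) * w  # derivative of -log D(x) with respect to x
            ga += g * z
            gb += g
        a -= lr * ga / batch
        b -= lr * gb / batch
    return a, b


a, b = train_toy_gan()
print(f"generator scale a = {a:.2f}, offset b = {b:.2f}")
```

Because a single logistic unit can essentially only tell the two distributions apart by their means, this generator mainly learns to shift its outputs toward the real mean, so `b` should end up close to 4. Real GANs use deep networks for both players so they can capture much richer structure.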

Applications: GANs are used across many industries because they can generate high-quality, realistic data. Here are some common applications of GANs:

- Image generation and enhancement: GANs can generate high-quality images and can also enhance existing low-quality images, for example by increasing their resolution. This makes them useful for tasks like photo restoration, particularly in media.

- Content Creation: Digital artists use GANs to create striking digital artwork. Because GANs can produce realistic images, they are well suited to generating and refining artwork guided by the artist's input.

- Medical Imaging: In healthcare, GANs can generate synthetic medical images that help train diagnostic models. This is especially useful for rare diseases, where real-world data is limited.

Variational Autoencoders

Variational Autoencoders (VAEs) are machine-learning models that learn a compressed representation of existing data and then use it to generate new data. Here's how they work:

- Encoding: The VAE's encoder takes complex input data, such as an image or a piece of text, and compresses it into a much smaller representation in what is called the latent space. Rather than a single point, the encoder outputs a probability distribution (a mean and a variance) that captures only the most important features of the input.

- Decoding: The decoder takes a point sampled from that distribution and reconstructs the input from it. During training, the model is penalized both for poor reconstructions and for latent distributions that drift too far from a standard normal distribution, which keeps the latent space smooth and well organized.

- Final Output: Once trained, the VAE can generate entirely new data by sampling a point from the latent space and passing it through the decoder. Because the latent space captures the most important features and patterns, the results resemble the training data, whether images, sounds, or text.
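The encode, sample, and decode steps above can be sketched with the three standard VAE ingredients: the reparameterization trick for sampling a latent point, the KL-divergence penalty that pulls each latent distribution toward a standard normal, and generation by decoding a random latent point. This is a minimal plain-Python sketch; the squared-error reconstruction term (which corresponds to a Gaussian decoder up to constants) and the toy decoder in the usage line are our assumptions, and a real VAE would use neural networks for the encoder and decoder.

```python
import math
import random


def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) (the reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def vae_loss(x, x_hat, mu, logvar):
    """Reconstruction error plus the KL penalty that keeps the latent space well organized."""
    reconstruction = sum((xi - xh) ** 2 for xi, xh in zip(x, x_hat))
    return reconstruction + kl_to_standard_normal(mu, logvar)


def generate(decoder, latent_dim, rng):
    """Create new data by decoding a point sampled from the N(0, I) prior."""
    z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
    return decoder(z)


rng = random.Random(0)
toy_decoder = lambda z: [2.0 * v for v in z]  # hypothetical stand-in for a trained decoder
print(generate(toy_decoder, latent_dim=2, rng=rng))
```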

Applications: Here are some key applications of VAEs:

- Signal Analysis: VAEs are used to analyze various signals, including data from IoT devices and biological signals like EEG, because they can help reveal patterns or anomalies in the data.

- Drug Discovery: In the medical sector, VAEs are used to generate molecular structures for drug discovery. They can assist in discovering new compounds or improving existing ones.

- Gaming: VAEs can help generate new characters, environments, or even game levels. This can help game developers enrich the player's experience and increase a game's popularity.

Autoregressive Models

Autoregressive models predict the next value in a sequence from the values that came before it. Here's how they work:

- Input Sequence: The model starts from an ordered sequence of past data. For instance, suppose you want to predict future stock prices. In this case, the model analyzes stock prices from the past few days, weeks, or even months.

- Identifying the pattern: The model then learns the patterns and correlations in that sequence. In our example, it looks for trends in the stock prices or correlations between market forces and stock prices.

- Predicting the next value: Based on these patterns, the model predicts the next value, appends it to the sequence, and can repeat the process to forecast further ahead. For instance, if stock prices have been trending upward, the model may predict that the trend will continue.
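The three steps above can be illustrated with the simplest autoregressive model, AR(1), which predicts each value as a constant plus a multiple of the previous value: x_t = c + φ·x_(t−1). The sketch below fits c and φ with ordinary least squares and then forecasts by feeding each prediction back in as the next input. It is a minimal illustration, and the price series in the usage line is made up; real forecasting models typically use more lags, exogenous variables, and proper validation.

```python
def fit_ar1(series):
    """Fit x_t = c + phi * x_(t-1) by ordinary least squares."""
    xs, ys = series[:-1], series[1:]  # (previous value, next value) pairs
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    phi = cov / var
    c = mean_y - phi * mean_x
    return phi, c


def forecast(series, steps, phi, c):
    """Predict `steps` future values, appending each prediction before the next one."""
    predictions, last = [], series[-1]
    for _ in range(steps):
        last = c + phi * last
        predictions.append(last)
    return predictions


prices = [100.0, 102.0, 101.0, 104.0, 106.0, 105.0, 108.0]  # hypothetical prices
phi, c = fit_ar1(prices)
print(forecast(prices, 3, phi, c))
```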

Applications: These models have many applications; here are three major ones that are popular right now:

- Weather Forecasting: These models can help forecast weather changes based on patterns in past observations. They can also take additional variables such as humidity and wind speed into account.

- Demand Forecasting: Many businesses use autoregressive models to predict future demand from past sales data. This helps with inventory management.

- Economic Forecasting: Governments and financial institutions use these models to forecast indicators like GDP growth, inflation, recession risk, and other market trends.

Transformer Models

Transformer models are modern AI models that learn the relationships between the elements of a sequence, such as the words in a sentence. Instead of processing data strictly in order, a transformer uses a mechanism called attention to weigh how relevant each part of the input is to every other part, regardless of its position in the sequence. Here's how it works:

- Input Data: The model receives input data and breaks it into smaller units, called tokens, that it can process.

- Focus: Using attention, the model gives more weight to the most relevant parts of the data and less weight to the rest.

- Understanding: Across many layers, the model builds up a representation of the patterns and relationships in the data.

- Final Output: Using these representations, the model generates a new output, for example the next word in a sentence.
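The "Focus" step is typically implemented as scaled dot-product attention: each query is compared with every key, the scores are divided by the square root of the key dimension and turned into weights with a softmax, and the output is the weighted average of the value vectors. The plain-Python sketch below shows that computation. In a real transformer the queries, keys, and values are learned projections of token embeddings computed with matrix operations; here they are small hand-made vectors, an assumption for illustration only.

```python
import math


def softmax(scores):
    """Turn scores into weights that sum to 1 (max subtracted for numerical stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights): softmax(q . k / sqrt(d_k)) applied to the values."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How similar this query is to every key, regardless of position.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


out, weights = scaled_dot_product_attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(weights, out)  # the first key matches the query better, so it gets more weight
```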

Applications: Transformer models are used in a wide variety of applications. Here are a few use cases:

- Education: Transformer models can be used to help assess essays, supporting more consistent grading.

- Automobile: These models can analyze sensor data from autonomous vehicles to help predict potential failures.

- Financial Sector: Transformer models are particularly helpful for detecting anomalies in financial transactions, which helps flag potentially fraudulent activity.

How Are Generative AI Models Trained?

Training a generative AI model is a multi-step process: selecting the right model architecture, gathering the right datasets, training the algorithms, and finally evaluating the model's performance. Every step counts; a mistake at any one of them can degrade the entire model and keep it from delivering good results. Let's look at how each step works:

Selecting the Right Model Architecture

The architecture of a generative AI model is its most vital component. It defines how the model functions, analyzes patterns, and generates output. Different kinds of output, such as images, text, or audio, often call for different architectures. Once the architecture is chosen, the model's hyperparameters, such as learning rate and batch size, are tuned to improve overall performance. The right combination of architecture and hyperparameters is therefore essential for a generative AI model to work well.
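Hyperparameter tuning is often done with a simple grid search: train once per combination of values and keep the combination with the lowest validation loss. The sketch below shows that loop over learning rate and batch size. `train_and_evaluate` is a hypothetical stand-in for a real training run (it fits a single parameter to the mean of the data with one epoch of mini-batch gradient descent); in practice each call would train and validate an actual model, which is far more expensive.

```python
import itertools


def train_and_evaluate(lr, batch_size, data):
    """Hypothetical stand-in for a training run; returns a validation loss."""
    theta = 0.0
    for start in range(0, len(data), batch_size):
        batch = data[start:start + batch_size]
        grad = sum(2.0 * (theta - x) for x in batch) / len(batch)  # gradient of squared error
        theta -= lr * grad
    mean = sum(data) / len(data)
    return (theta - mean) ** 2  # squared distance from the value we wanted to learn


def grid_search(grid, data):
    """Try every (learning_rate, batch_size) pair and keep the lowest-loss one."""
    best = None
    for lr, bs in itertools.product(grid["learning_rate"], grid["batch_size"]):
        loss = train_and_evaluate(lr, bs, data)
        if best is None or loss < best[0]:
            best = (loss, {"learning_rate": lr, "batch_size": bs})
    return best


grid = {"learning_rate": [0.001, 0.01, 0.5], "batch_size": [8, 32]}
print(grid_search(grid, data=[5.0] * 64))
```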

Data Collection and Preprocessing

Generative AI models need large, diverse datasets. After assembling a dataset that represents the task well, the data must be cleaned and preprocessed. This improves its quality by removing duplicates and corrupted records. Data augmentation can also increase diversity by adding synthetic variations of existing examples, which improves the model's robustness.
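As a minimal illustration of these preprocessing steps, the sketch below drops missing or corrupted records (here, `None` or NaN values), removes exact duplicates while preserving order, and augments the cleaned data by adding jittered copies with small Gaussian noise. Representing samples as tuples of floats and using noise as the augmentation are our assumptions; images, text, and audio each have their own cleaning and augmentation techniques.

```python
import math
import random


def clean(samples):
    """Drop missing/corrupted records and exact duplicates, keeping the first occurrence."""
    seen, cleaned = set(), []
    for s in samples:
        if s is None or any(v is None or math.isnan(v) for v in s):
            continue  # missing or corrupted record
        if s in seen:
            continue  # exact duplicate
        seen.add(s)
        cleaned.append(s)
    return cleaned


def augment(samples, copies=1, noise=0.01, seed=0):
    """Add `copies` noisy variants of every sample to increase diversity."""
    rng = random.Random(seed)
    out = list(samples)
    for s in samples:
        for _ in range(copies):
            out.append(tuple(v + rng.gauss(0.0, noise) for v in s))
    return out


raw = [(1.0, 2.0), (1.0, 2.0), (float("nan"), 1.0), None, (3.0, 4.0)]
data = augment(clean(raw), copies=2)
print(len(data))
```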


Training Process and Algorithm Optimization

When training the model, choosing an appropriate loss function is important. Mean squared error is commonly used for regression tasks, and cross-entropy loss for classification tasks. Optimization algorithms such as gradient descent or Adam then minimize this loss by iteratively updating the model's weights and biases, which improves its accuracy.
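Here is a small sketch of those ideas in plain Python: mean squared error and cross-entropy loss, plus plain gradient descent that fits a line y = w·x + b by repeatedly stepping the weight and bias against the gradient of the MSE. It is an illustration only; the toy data and the learning rate are assumptions, Adam (which adapts the step size per parameter) is not shown, and real generative models are trained with frameworks that compute gradients automatically.

```python
import math


def mse(preds, targets):
    """Mean squared error, typical for regression."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)


def cross_entropy(probs, target_index):
    """Cross-entropy for one example: negative log-probability of the correct class."""
    return -math.log(probs[target_index])


def fit_linear_gd(xs, ys, lr=0.05, steps=2000):
    """Minimise the MSE of y = w * x + b with plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        preds = [w * x + b for x in xs]
        grad_w = sum(2.0 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2.0 * (p - y) for p, y in zip(preds, ys)) / n
        w -= lr * grad_w  # step against the gradient
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]  # toy data generated from y = 2x + 1
print(fit_linear_gd(xs, ys))
```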

Refinement

The last step is measuring the model's performance with metrics such as the Inception Score (IS), which reflects image quality and diversity, or the Fréchet Inception Distance (FID), which measures how close generated images are to real ones (lower is better). Performance can then be improved further by tuning hyperparameters such as learning rate or batch size. If the results are still unsatisfactory, the data, model architecture, or training settings may need to change, and the cycle is repeated until the desired quality is reached.
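FID summarizes real and generated samples as Gaussians (a mean and covariance of Inception-network features) and computes the Fréchet distance between them. The sketch below is a simplified one-dimensional version of that distance, which reduces to (μ₁ − μ₂)² + (σ₁ − σ₂)². It only illustrates the formula on raw numbers; computing an actual FID requires extracting features with a pretrained Inception model and using a matrix square root over their covariances.

```python
import statistics


def frechet_distance_1d(real, generated):
    """Fréchet distance between 1-D Gaussians fitted to two samples (lower is better)."""
    mu_r, mu_g = statistics.fmean(real), statistics.fmean(generated)
    sd_r, sd_g = statistics.pstdev(real), statistics.pstdev(generated)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2


print(frechet_distance_1d([0.0, 2.0], [1.0, 3.0]))  # same spread, means differ by 1
```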

Challenges Of Generative AI Models

There is no doubt that generative AI has transformed the way data is processed and created. From analyzing a wide array of datasets to producing precise output, generative AI is paving the way for more efficient operations. However, a major challenge with these models is accuracy and reliability: they can reflect biases in their training data and can produce inaccurate information. Let's look at the main challenges:

- Mode Collapse in GANs: Mode collapse occurs when the generator finds a narrow set of outputs that reliably fool the discriminator and keeps producing only those. Instead of capturing the full diversity of the training data, it produces limited and repetitive output.

- Extensive Training: Training generative AI models requires substantial resources, including large amounts of computing power and data as well as experienced developers. Not every organization has these capabilities, which can let large corporations dominate the market.

- Ethical Concerns: Generative AI models can produce convincing fabricated content, including deepfakes and fake news. This can spread misinformation and malicious propaganda. Moreover, preventing misuse remains difficult while the technology is still evolving.

- Interpretability: It can be hard to determine why a generative model produced a particular output. These models are complex, black-box systems that lack transparency, which makes it difficult to explain how they arrive at a result. This is especially problematic in regulated industries, where explainability is essential.
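One simple way to spot the repetitive output that mode collapse produces is to measure how diverse a batch of generated samples is. The sketch below computes distinct-n, the fraction of unique n-grams among all n-grams in a set of generated texts; values near 1 indicate varied output, and values near 0 indicate heavy repetition. Using whitespace tokenization and this particular metric is our choice for illustration; image generators are usually checked with other diversity measures.

```python
def distinct_n(samples, n=2):
    """Fraction of unique n-grams across all samples (higher means more diverse)."""
    ngrams = []
    for text in samples:
        tokens = text.split()
        ngrams.extend(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    if not ngrams:
        return 0.0
    return len(set(ngrams)) / len(ngrams)


varied = ["the cat sat down", "a dog ran away"]
collapsed = ["the cat sat down", "the cat sat down"]
print(distinct_n(varied), distinct_n(collapsed))
```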

Evaluation & Monitoring Metrics for Generative AI

Evaluating generative AI requires an appropriate mix of quantitative and qualitative metrics to ensure the system delivers value and fulfills its objectives. Common quantitative measures include perplexity (how well a language model predicts text), BLEU (n-gram overlap between generated and reference text), and FID (how closely generated images match real ones in quality and diversity). Task-specific metrics are used as well: ROUGE measures overlap with reference summaries in summarization, while character and word error rates (CER/WER) measure transcription accuracy. Benchmarking against predefined datasets with these metrics provides a consistent yardstick for output quality.
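Two of these metrics are easy to compute by hand. Perplexity is the exponential of the average negative log-probability a model assigned to the tokens that actually occurred, and word error rate is the word-level edit distance (substitutions, insertions, deletions) between a transcript and its reference, divided by the reference length. The sketch below assumes the per-token probabilities are already available from some model; it is illustrative rather than a replacement for established evaluation libraries.

```python
import math


def perplexity(token_probs):
    """exp of the mean negative log-probability of the observed tokens (lower is better)."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))  # distances from an empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(prev[j] + 1,          # deletion
                          curr[j - 1] + 1,      # insertion
                          prev[j - 1] + cost)   # substitution or match
        prev = curr
    return prev[-1] / len(ref)


print(perplexity([0.25, 0.25, 0.25, 0.25]))  # uniform over 4 choices -> about 4
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```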


Monitoring Metrics for Performance and Reliability

Real-time monitoring focuses on ensuring consistent performance and ethical outputs during deployment. Metrics like response time, system uptime, and error rates track operational efficiency, while tools like bias detection, toxicity filters, and compliance frameworks safeguard ethical standards. Regular user feedback and human evaluation complement automated metrics to continuously refine the model. Combining these ensures generative AI systems remain adaptive, trustworthy, and aligned with business and user needs.
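Operational metrics like these are usually computed over a window of recent requests. The sketch below summarizes a batch of request records into a 95th-percentile response time (using the nearest-rank method) and an error rate. The record format, with `latency_ms` and `ok` keys, is a hypothetical assumption; production systems typically rely on a dedicated monitoring stack rather than hand-rolled code.

```python
import math


def p95_latency(latencies_ms):
    """95th-percentile latency using the nearest-rank method."""
    ordered = sorted(latencies_ms)
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]


def summarize(requests):
    """Summarize hypothetical request records of the form {"latency_ms": ..., "ok": ...}."""
    latencies = [r["latency_ms"] for r in requests]
    errors = sum(1 for r in requests if not r["ok"])
    return {"p95_latency_ms": p95_latency(latencies), "error_rate": errors / len(requests)}


requests = [{"latency_ms": ms, "ok": ms not in (10, 20)} for ms in range(1, 101)]
print(summarize(requests))
```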

Conclusion

Generative AI is gaining traction not only because of its innovative capabilities but also because it can greatly improve efficiency. Its flexibility enables businesses across industries to engage with customers more effectively and streamline their operations.

Industries such as finance, healthcare, and media are already seeing significant benefits from integrating generative AI models. However, concerns about misuse persist, driven largely by illicit activity such as deepfakes. While this remains an active topic of discussion, understanding how these models work will help industry leaders integrate them responsibly into their operations.

The future of these models will therefore depend largely on how industries view them and how effectively they integrate them into their operations.
